Once you are confident in your understanding of AI fundamentals within an in-house legal environment, it’s time to get prepared. To ensure that your AI investments pay off over the long term, it's worth taking the time to get your house in order.

A strong AI preparation strategy should focus on three key elements: managing risk, identifying your opportunities, and making your data shine.

Let’s explore each in turn.

As established use cases continue to mature, there’s never been a safer time to invest in proven AI tools. However, some remain understandably cautious about adopting them into a legal environment.

According to Accenture, the single biggest barrier to AI adoption for businesses in 2024 is “managing data ethics and responsible AI, data privacy and information security”. This is a particular concern for legal counsel, who are held to professional standards of conduct and shoulder the responsibility of safeguarding their organizations against risk.

For some legal teams, fear of risk exposure can quickly lead to adoption paralysis. However, the good news is that by taking a strategic approach to your use cases, vendor choice and deployment from the outset, the potential pitfalls of AI can be easily managed.

The most successful AI adopters are those who take a “responsible by design” approach – in other words, they treat lawful, ethical and responsible use as a primary driver of their AI strategy. By setting guardrails from day one, they stay on the right side of compliance, safeguard their businesses and stakeholders from reputational damage, and future-proof their AI strategies over the long term.

With this in mind, here are the three biggest pitfalls that legal teams face when using AI for the first time – and how to safeguard against them.

Let’s get this one out of the way first, as it’s the most infamous risk associated with GenAI tools: the tendency of some LLMs to “hallucinate”, or in other words, to fabricate plausible-sounding but false information.

Recent studies have made it clear that AI-powered legal research tools still have a tendency to hallucinate. This issue has led to some serious incidents within the legal profession – most involving the unsupervised use of chatbots (like ChatGPT) for substantive legal work.

Without rigorous benchmarking and a robust system to verify the accuracy of outputs, these tools can pose a higher risk in a legal environment. However, many AI tools are unaffected by this issue, and for those that are affected, users can take simple steps to reduce the risk of hallucinations.

As we increasingly rely on technology to manage our in-house legal workflows, it’s never been more critical to safeguard the highly sensitive data that we process on a daily basis on behalf of our organizations.

Because AI tools work best by processing high-quality data at scale, they can introduce a heightened risk of data breaches if guardrails are not in place.

One of the biggest mistakes that lawyers can make when using AI for the first time is to rely on tools which compromise sensitive or proprietary data (usually because they use this information to train their public models).

The consequences of this can be serious. As well as putting your business at risk of exposing commercially sensitive information, it could also put you in breach of data protection regulations (such as the GDPR, CCPA and HIPAA).

With regulations on AI systems still in development, ticking this box can feel particularly tricky. Here’s how to manage it.

The last critical risk to be aware of when working with AI in an in-house environment is – you guessed it – yourself. Like all technology, AI tools are ultimately deployed by humans, and no amount of product development can completely eliminate the risk of poor end use.

This sentiment is echoed by the American Bar Association, which has set out a specific duty of competence for lawyers who use AI in their work. In addition to the standard ethical duty of confidentiality,

“Lawyers using GAI tools have a duty of competence, including maintaining relevant technological competence, which requires an understanding of the evolving nature of GAI.”

Deploying the wrong kind of AI for your needs (or deploying it without appropriate guardrails, training, or oversight) can increase the likelihood and impact of more material risks like hallucinations and data breaches.

Being aware of the biggest risks that come with AI is key – but to give clear direction to your adoption strategy, it’s equally important to identify how AI can help you deliver on your wider technology goals.

AI tools are multi-faceted, and can be applied to enhance multiple aspects of legal work. From NLP and ML to RPA and predictive analytics, AI can help you achieve greater efficiency, productivity, and strategic advantage. These advantages are amplified when you integrate multiple types of AI into your work.

Here are some of the most common applications of AI for in-house legal teams – and how each can help you in your daily work.

From streamlining workflows to enhancing data and analytics, there’s a lot that AI tools can help lawyers with in an in-house environment.

To determine your best path to adoption, you’ll need to identify which AI use cases will deliver the highest-value results for your team.

The first step is to identify the core outcomes that you want to achieve from your overall legal technology (for example, improving efficiency) so that you can find the right AI tools to amplify them. In an ideal world, you’ll choose a product which does this all at once.

Next, it’s time to identify the workflows that are causing the biggest pain points in your team – and determine whether they are strong candidates for AI.

Keeping these two criteria in mind will help you identify the most suitable tools for your needs when you go to market.

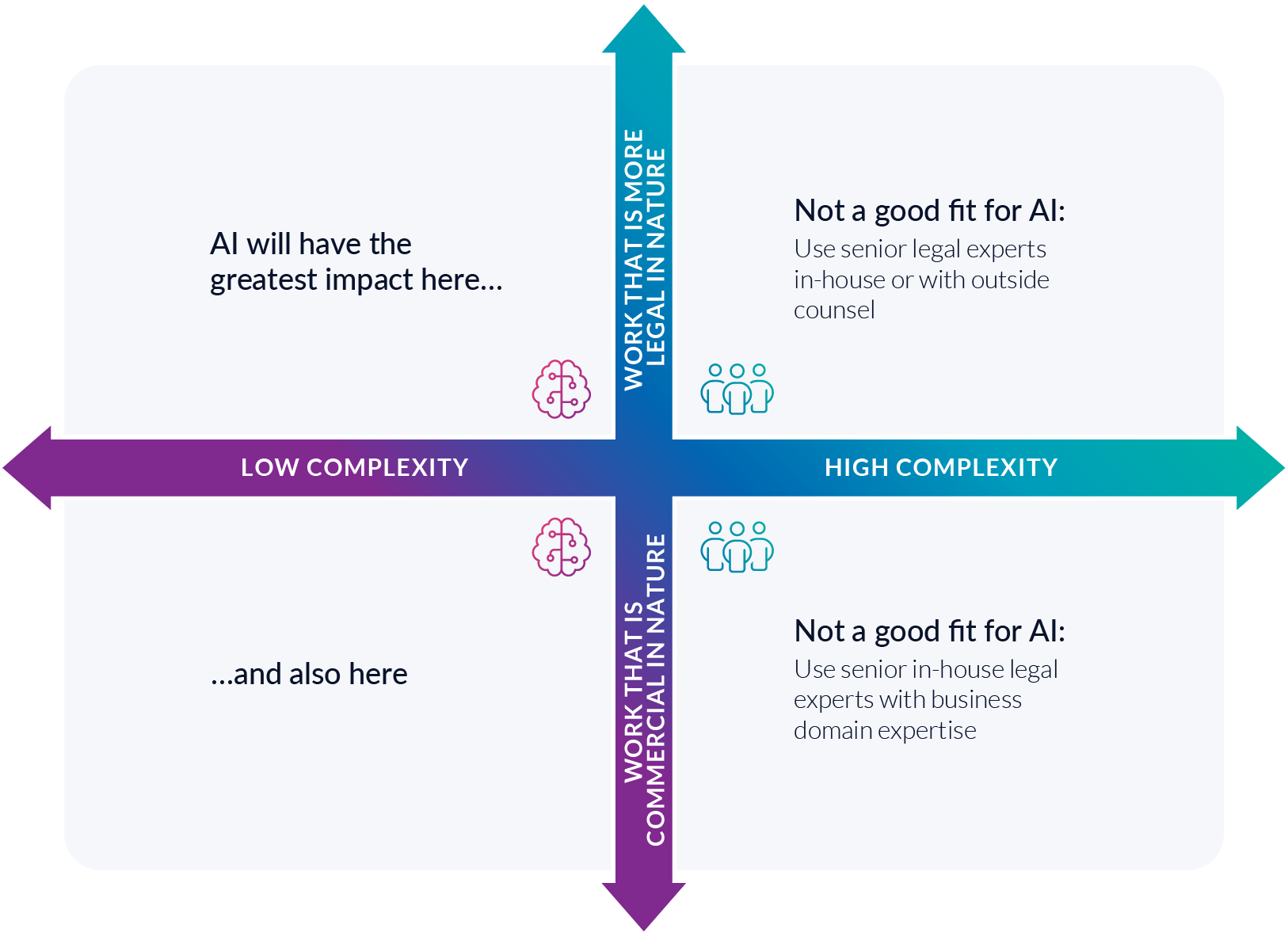

Here's a simple model you can use to identify opportunities to safely leverage AI in an in-house environment.

Another easy way to think about it is this: If you find yourself answering yes to all of the following questions, you’ve probably found yourself a “no-brainer” AI use case.

There’s a final factor which is easy to overlook when preparing to adopt AI for the first time – the critical role that your data will play in determining the success of your chosen tools.

What is “data”? For the purposes of this guide, data is simply all the information that sits beneath, and is generated by, your team’s daily legal workflows – from contract terms to the figures that make up your legal spend.

Whether AI-powered or not, data is the backbone of most legal technology, and the relationship between the two should be symbiotic. Legal tech tools can be used to centralize data – which, in turn, fuels them to deliver stronger outcomes.

Data is most powerful when it is cohesive and easy to use. However, because it’s generated from multiple sources as a product of your daily workflows, it can easily become fragmented (for example, stored across multiple email inboxes and spreadsheets) and difficult to oversee.

One of the biggest advantages of many legal technology tools is their ability to consolidate your data into a “source of truth”: a central data repository within which all information can be stored, organized, searched, and shared.

CLMs, matter management systems and e-billing tools are all examples of tools which offer this capability (and which work more effectively because of it), but they only do so in relation to specific workflows.

When your legal tech tools are powered by AI, their relationship with your data becomes critical. A high-quality source of truth matters more than ever – but the task of building and leveraging it also becomes far easier and more valuable.

The right AI tools can amplify the power of your data in three ways:

By putting the power of AI behind your data and building a unified source of truth, you’ll create a self-sustaining data ecosystem which will grow in value over time.

And to supercharge it even further, you can choose a tool which lets you achieve this across all of your legal work – all at once.

While consolidating your data within an individual workflow (for example, a CLM or e-billing platform) has huge benefits, a truly unified source of truth, such as a legal workspace, has even greater value as a central repository for all your work – particularly if it’s powered by AI.

One of the biggest challenges with multiple point solutions is inefficiency. The more tools you’re working with, the more time you have to spend navigating between them.

This extends to your data, too: while far better than relying on email and spreadsheets, even a well-unified point solution will still be limited to the data generated by that workflow.

However, by consolidating all of your data from across your legal work into one truly unified source of truth, you’ll dramatically elevate the impact of your AI tools.

For example: An AI-powered search tool is more powerful if it’s able to search across a complete repository of contracts and matters, because it’ll deliver more context and useful information in less time. An AI-powered analytics tool will be more efficient, comprehensive, and insightful when it can answer questions and track KPIs across all of your team’s work at once.

As you start to evaluate AI tools, it will pay to keep the critical relationship between data and AI-powered legal technology top of mind.

How you carry this into your adoption strategy might depend on where you’re currently at in your legal tech journey. To home in on the scope of your ideal solution, start by thinking about where your data is currently stored:

As you'll be starting from a blank canvas, now is the perfect time to consider a comprehensive solution which will cater to your longer-term needs.

You could start by exploring the AI capabilities offered by your existing vendors – but it’s also worth thinking about how a consolidated solution might be more manageable and efficient, and let you create a unified source of truth that amplifies the power of AI beyond specific workflows.

Whatever the state of your current tech stack, to leverage the reciprocal relationship between data and AI to its full potential, you should now be thinking about AI as an essential component of a dedicated legal tech solution – and, ideally, as part of a consolidated platform (such as a legal workspace).

With this in mind, your next step is to choose the right tool for the job.